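Paper

BARON first samples contextually related regions to form a "bag". Since the region proposal network (RPN) has been shown to cover potential novel objects, we explore a neighborhood sampling strategy that samples boxes around the region proposals, to help model the co-occurrence of a bag of visual concepts.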
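Below is a minimal sketch of neighborhood sampling. It rests on my own simplifying assumptions and is not BARON's actual sampler. The neighbors of a proposal are taken to be the 8 same-size boxes tiled around it, clipped to the image. A neighbor is dropped when less than `min_keep` of its area survives clipping. `sample_bag` and `min_keep` are hypothetical names.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def sample_bag(proposal: Box, img_w: float, img_h: float,
               min_keep: float = 0.5) -> List[Box]:
    """Hypothetical neighborhood sampling: the proposal plus the same-size
    boxes around it (a 3x3 grid centred on the proposal), clipped to the image."""
    x1, y1, x2, y2 = proposal
    w, h = x2 - x1, y2 - y1
    bag = [proposal]
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dx == 0 and dy == 0:
                continue  # the proposal itself is already in the bag
            nx1, ny1 = x1 + dx * w, y1 + dy * h
            nx2, ny2 = nx1 + w, ny1 + h
            # clip the shifted box to the image
            cx1, cy1 = max(nx1, 0.0), max(ny1, 0.0)
            cx2, cy2 = min(nx2, img_w), min(ny2, img_h)
            if cx2 <= cx1 or cy2 <= cy1:
                continue  # completely outside the image
            if (cx2 - cx1) * (cy2 - cy1) < min_keep * w * h:
                continue  # mostly outside the image
            bag.append((cx1, cy1, cx2, cy2))
    return bag


# A proposal in the middle of a 300x300 image keeps all 8 neighbors.
print(len(sample_bag((100, 100, 200, 200), 300, 300)))  # 9
```

For a proposal in an image corner, the neighbors that fall entirely outside the image are discarded, so the bag shrinks to 4 boxes.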

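Second, BARON obtains the bag-of-regions embedding by projecting the region features into the word embedding space and encoding these pseudo words with the text encoder (TE) of a frozen VLM. Because the region features are projected to pseudo words, the TE can naturally represent the co-occurring semantic concepts and understand the scene as a whole. To retain the spatial information of the region boxes, BARON projects the box shape and box center position into embeddings and adds them to the pseudo words before feeding them to the TE.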
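The following numpy sketch turns a bag into a pseudo-word sequence, following the description above. Each region feature is linearly projected to a few pseudo words, and an embedding of the normalised box shape and center is added to them. Several things are assumptions for illustration only:

- the dimensions
- the number of pseudo words per region
- the random projection weights
- the name `bag_to_pseudo_words`

The frozen CLIP text encoder that would consume the sequence is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: region feature dim, word-embedding dim, pseudo words per region.
D_REGION, D_WORD, N_WORDS = 1024, 512, 4

# Hypothetical (untrained) projection weights.
W_words = rng.normal(0, 0.02, (D_REGION, N_WORDS * D_WORD))
W_shape = rng.normal(0, 0.02, (2, D_WORD))   # (w, h) -> word space
W_center = rng.normal(0, 0.02, (2, D_WORD))  # (cx, cy) -> word space


def bag_to_pseudo_words(region_feats, boxes, img_w, img_h):
    """region_feats: (B, D_REGION) for the B regions of one bag.
    boxes: (B, 4) as (x1, y1, x2, y2) in pixels.
    Returns a (B * N_WORDS, D_WORD) pseudo-word sequence."""
    n = region_feats.shape[0]
    words = (region_feats @ W_words).reshape(n, N_WORDS, D_WORD)
    # Box shape and center, normalised by the image size.
    wh = np.stack([(boxes[:, 2] - boxes[:, 0]) / img_w,
                   (boxes[:, 3] - boxes[:, 1]) / img_h], axis=1)
    ctr = np.stack([(boxes[:, 0] + boxes[:, 2]) / (2 * img_w),
                    (boxes[:, 1] + boxes[:, 3]) / (2 * img_h)], axis=1)
    pos = wh @ W_shape + ctr @ W_center      # (B, D_WORD) spatial embedding
    words = words + pos[:, None, :]          # added to every word of that region
    return words.reshape(n * N_WORDS, D_WORD)


feats = rng.normal(size=(3, D_REGION))
boxes = np.array([[100, 100, 200, 200],
                  [0, 100, 100, 200],
                  [200, 100, 300, 200]], dtype=float)
seq = bag_to_pseudo_words(feats, boxes, 300, 300)
print(seq.shape)  # (12, 512)
```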

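Environment setup

The README says to train with slurm_train, but that fails with the following error (presumably because this machine has no reachable Slurm controller):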

srun: error: Unable to allocate resources: Unable to contact slurm controller (connect failure)

So I tried training with dist_train instead (the README doesn't describe how to train with this script).

System CUDA toolkit: 10.0

CUDA version supported by the driver: 12.4

```
$ nvcc --version  # shows that the system CUDA toolkit is 10.0
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2018 NVIDIA Corporation
Built on Sat_Aug_25_21:08:01_CDT_2018
Cuda compilation tools, release 10.0, V10.0.130
```
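The next step compares the system toolkit with the driver. To do this in a script, a small helper can pull the release number out of the nvcc output. It assumes the `release X.Y` wording shown above. The helper names are hypothetical.

The check relies on the CUDA version reported by the driver (nvidia-smi) being the newest runtime that driver supports.

```python
import re
from typing import Optional, Tuple


def nvcc_release(version_text: str) -> Optional[str]:
    """Extract the toolkit release (e.g. '10.0') from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", version_text)
    return match.group(1) if match else None


def version_tuple(version: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in version.split("."))


def driver_supports(toolkit: str, driver_cuda: str) -> bool:
    """True if a toolkit/runtime release is not newer than the driver's CUDA version."""
    return version_tuple(toolkit) <= version_tuple(driver_cuda)


sample = """nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2018 NVIDIA Corporation
Built on Sat_Aug_25_21:08:01_CDT_2018
Cuda compilation tools, release 10.0, V10.0.130"""
toolkit = nvcc_release(sample)
print(toolkit, driver_supports(toolkit, "12.4"))  # 10.0 True
```

On a real machine, the text can come from `subprocess.run(["nvcc", "--version"], capture_output=True, text=True).stdout`.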

Install the packages listed in the README, in order:

$ pip install openmim mmengine

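mmcv needs PyTorch to be installed first. Below is the PyTorch install command I picked for the system's CUDA 10.0. Note that it actually installs the `cudatoolkit=10.2` build, since conda installs its own CUDA runtime alongside PyTorch instead of using the system toolkit.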

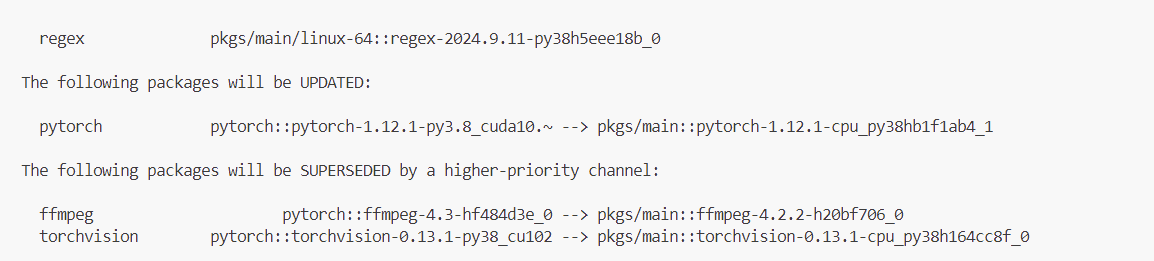

$ conda install pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=10.2 -c pytorch

The mmcv version must not be too new, otherwise it conflicts with what mmdet requires later (for example, this error):

mmdet 3.3.0 requires mmcv<2.2.0,>=2.0.0rc4; extra == "mim", but you have mmcv 2.2.0 which is incompatible.

mmdet 3.3.0 requires mmcv >=2.0.0rc4, <2.2.0, so install mmcv 2.0.0:

$ mim install mmcv==2.0.0
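Below is a quick way to check a candidate mmcv version against the range from the error message before installing it. `packaging.specifiers.SpecifierSet` is the general-purpose tool for this. The tiny parser here avoids that dependency and only handles final releases such as `2.1.0`. The function names are hypothetical.

```python
from typing import Tuple


def release_tuple(version: str) -> Tuple[int, ...]:
    """Parse a final-release version such as '2.0.0' into (2, 0, 0).
    Pre-releases like '2.0.0rc4' are deliberately not handled."""
    return tuple(int(part) for part in version.split("."))


def mmcv_ok_for_mmdet_330(mmcv_version: str) -> bool:
    # mmdet 3.3.0 requires mmcv >=2.0.0rc4, <2.2.0. For final releases the
    # lower bound is equivalent to >=2.0.0, because 2.0.0rc4 < 2.0.0.
    v = release_tuple(mmcv_version)
    return (2, 0, 0) <= v < (2, 2, 0)


for candidate in ("1.7.2", "2.0.0", "2.1.0", "2.2.0"):
    print(candidate, mmcv_ok_for_mmdet_330(candidate))
```

To check what is already installed, pass it `importlib.metadata.version("mmcv")`.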

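$ pip install git+https://github.com/lvis-dataset/lvis-api.git

This one failed, but it is the API for the LVIS dataset, so it may not be needed here.

$ mim install "mmdet>=3.0.0rc6"

This installed mmdet 3.3.0. The requirement must be quoted: unquoted, the shell treats `>` as output redirection.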

Packages the README doesn't list: ftfy (and regex)

$ conda install ftfy

$ conda install regex

Running training then fails with:

```
File "/home/anaconda3/envs/ovdet/lib/python3.10/importlib/__init__.py", line 126, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
ImportError: libc10_cuda.so: cannot open shared object file: No such file or directory
```

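Problem

While installing the other libraries, PyTorch was replaced by a CPU-only build, which has no libc10_cuda.so.

Solution:

Install the other packages first, then reinstall the CUDA build of PyTorch.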
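Another package's install silently swapped in a CPU-only build. A quick check after each install step catches that early. Below is a sketch; the function name is hypothetical. It relies on `torch.version.cuda` being `None` in CPU-only builds.

```python
def torch_build_report(torch_mod) -> str:
    """Summarise which PyTorch build is installed.

    `torch_mod` is the imported `torch` module (any object exposing
    `__version__`, `version.cuda` and `cuda.is_available()` works)."""
    cuda_build = torch_mod.version.cuda  # None for CPU-only builds
    if cuda_build is None:
        return f"torch {torch_mod.__version__}: CPU-only build"
    if not torch_mod.cuda.is_available():
        return f"torch {torch_mod.__version__}: CUDA {cuda_build} build, but no usable GPU"
    return f"torch {torch_mod.__version__}: CUDA {cuda_build} build, GPU available"
```

Usage: `import torch; print(torch_build_report(torch))`. If the output says "CPU-only build" right after installing another package, that package pulled in a CPU build of PyTorch.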

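At first I assumed the CLIP models were the same, so I symlinked /home/OVD/OADP-main/pretrained/clip/ViT-B-32.pt to checkpoints/clip_vitb32.pth, but that checkpoint can't actually be used here. When I then ran the code below, I forgot to remove the symlink first. torch.save wrote through the link, and /home/OVD/OADP-main/pretrained/clip/ViT-B-32.pt was overwritten.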

```python
import clip
import torch

model, _ = clip.load("ViT-B/32")
torch.save(model.state_dict(), 'checkpoints/clip_vitb32.pth')
```
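Opening a symlink for writing writes to its target, and that is how the pretrained file got overwritten. A small guard helps: remove the link itself (never the target) before saving, so the save creates a fresh regular file. `detach_symlink` is a hypothetical helper. The demo writes plain text to stand in for `torch.save`.

```python
import os
import tempfile


def detach_symlink(path: str) -> None:
    """If `path` is a symlink, delete the link itself so that a later save
    creates a new regular file instead of overwriting the link's target."""
    if os.path.islink(path):
        os.unlink(path)  # removes only the link; the target file is untouched


# Demo: a link pointing at a "pretrained" file is replaced, not written through.
with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "ViT-B-32.pt")
    link = os.path.join(tmp, "clip_vitb32.pth")
    with open(target, "w") as f:
        f.write("original weights")
    os.symlink(target, link)
    detach_symlink(link)
    with open(link, "w") as f:  # stands in for torch.save(state_dict, link)
        f.write("new state_dict")
    link_is_regular_file = os.path.isfile(link) and not os.path.islink(link)
    with open(target) as f:
        target_content = f.read()

print(target_content)  # original weights
```

In the script above, calling `detach_symlink('checkpoints/clip_vitb32.pth')` before `torch.save(...)` would have left ViT-B-32.pt intact.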

TODO: restore it in OADP with the following command:

python -c "import clip; clip.load_default()"

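CUDA out of memory

Reduce the learning rate: 0.04 → 0.001

Reduce the batch size: 4 → 2

Only the smaller batch reduces memory use. The learning rate does not affect memory, but a smaller batch usually calls for a smaller learning rate.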
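A common way to pick the new learning rate is the linear scaling rule, which keeps lr / total batch size constant. mmdet 3.x configs also expose an `auto_scale_lr` setting for this. Below is a sketch with a hypothetical function name.

```python
def linear_scaled_lr(base_lr: float, base_batch: int, new_batch: int) -> float:
    """Linear scaling rule: lr changes in proportion to the total batch size."""
    return base_lr * new_batch / base_batch


# Per-GPU batch 4 -> 2 on the same number of GPUs halves the total batch.
print(linear_scaled_lr(0.04, 4, 2))
```

Applied only to the per-GPU change (same number of GPUs), the rule gives 0.02. The total batch also depends on how many GPUs are used, and these notes don't record that. So the value 0.001 used above cannot be checked against the rule from this information alone.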

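Code

Learnable prompt: models/roi_heads/bbox_head.py implements a BaronBBoxHead class that inherits from mmdet's BBoxHead. Below is the part of `__init__` that sets up the classifier embeddings. The class embeddings loaded from `cls_embeddings_path` are a fixed buffer. What is learnable is a scalar bias `cls_bias`, which is added to the classification logits (allowed only with a sigmoid loss), and, optionally, the background embedding (`bg_embedding == 'learn'`).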

```python
# Fragment of BaronBBoxHead.__init__ (models/roi_heads/bbox_head.py)
if cls_bias is None:
    self.cls_bias = 0.0
else:
    assert self.loss_cls.use_sigmoid, \
        "cls_bias only used for sigmoid logits"
    # scalar learnable bias, later added to the logits
    self.cls_bias = nn.Parameter(torch.ones(1) * cls_bias)
if cls_embeddings_path is not None:
    # fixed (non-trainable) class-name embeddings, one row per class
    cls_embeddings = torch.from_numpy(
        np.load(cls_embeddings_path)).float()
    assert self.num_classes == cls_embeddings.shape[0]
    self.register_buffer('cls_embeddings', cls_embeddings)
    self.learn_bg = False
    if bg_embedding == 'zero':
        # fixed all-zero background embedding
        self.register_buffer('bg_embedding',
                             torch.zeros_like(cls_embeddings[:1]))
    elif bg_embedding == 'learn':
        # learnable background embedding, Xavier-initialised via init_cfg
        self.bg_embedding = nn.Linear(1, cls_embeddings.shape[1])
        self.init_cfg += [
            dict(type='Xavier', distribution='uniform',
                 override=dict(name='bg_embedding')),
        ]
        self.learn_bg = True
    else:
        raise ValueError(f"{bg_embedding} not supported.")
```
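In the `'learn'` branch, `pred_cls_logits` feeds the `nn.Linear(1, d)` layer a constant input of 1. Its output is therefore just the weight column plus the bias, which amounts to a freely learnable d-dimensional vector (then L2-normalised). A numpy check of that equivalence follows. The embedding size and random values are arbitrary and chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8  # hypothetical embedding size (CLIP ViT-B/32 text embeddings are 512-d)

# nn.Linear(1, d) stores a weight of shape (d, 1) and a bias of shape (d,).
weight = rng.normal(size=(d, 1))
bias = rng.normal(size=d)

ones = np.ones((1, 1))                                # pseudo_words.new_ones(1, 1)
bg = ones @ weight.T + bias                           # what the Linear layer computes
bg = bg / np.linalg.norm(bg, axis=-1, keepdims=True)  # F.normalize(p=2, dim=-1)

# The same vector without a layer: weight column + bias, then L2-normalised.
direct = weight[:, 0] + bias
direct = direct / np.linalg.norm(direct)
print(np.allclose(bg[0], direct))  # True
```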

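These embeddings are used in the `pred_cls_logits` method. The training-time adjustments also live there: the temperature is `cls_temp` in training and `test_cls_temp` at test time, and `cls_bias` is added only during training with a sigmoid loss.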

```python
# Method of BaronBBoxHead (models/roi_heads/bbox_head.py)
def pred_cls_logits(self, pseudo_words, clip_model):
    text_encoder = clip_model.text_encoder
    if pseudo_words.shape[0] == 0:
        return pseudo_words.new_zeros(0, self.num_classes + 1)
    with autocast():  # mixed-precision context for the text encoder
        valid_mask = self._drop_word(pseudo_words)
        # wrap the pseudo words into a text sequence for the frozen text encoder
        pseudo_text, end_token_ids = text_encoder.prepare_pseudo_text_tensor(
            pseudo_words, valid_mask)
        if self.use_attn12_output:
            cls_features, _, _ = \
                text_encoder.encode_pseudo_text_endk(pseudo_text, end_token_ids,
                                                     text_pe=True,
                                                     stepk=12, normalize=True)
        else:
            cls_features = \
                text_encoder.encode_pseudo_text(pseudo_text, end_token_ids,
                                                text_pe=True, normalize=True)
    if self.learn_bg:
        input_ones = pseudo_words.new_ones(1, 1)
        bg_embedding = self.bg_embedding(input_ones)
        bg_embedding = F.normalize(bg_embedding, p=2, dim=-1)
    else:
        bg_embedding = self.bg_embedding
    # C class embeddings + 1 background embedding
    cls_embeddings = torch.cat([self.cls_embeddings, bg_embedding])
    if self.training:
        cls_logits = self.cls_temp * cls_features @ cls_embeddings.T
    else:
        cls_logits = self.test_cls_temp * cls_features @ cls_embeddings.T
    if self.training and self.loss_cls.use_sigmoid:
        cls_logits += self.cls_bias
    assert cls_logits.shape[1] == self.num_classes + 1
    return cls_logits
```
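The text encoder, word dropout and autocast can be stripped away. What remains of the scoring step is a temperature-scaled similarity between the encoded region features and the fixed class embeddings, plus one background column and an optional bias. Below is a numpy sketch under those simplifications. The function and variable names, the sizes and the temperature of 50 are hypothetical. The class embeddings are assumed to be L2-normalised already.

```python
import numpy as np


def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def cls_logits_sketch(cls_features, cls_embeddings, bg_embedding, temp, bias=0.0):
    """cls_features: (N, d) L2-normalised region embeddings from the text encoder.
    cls_embeddings: (C, d) fixed class embeddings; bg_embedding: (1, d).
    Returns (N, C + 1) logits; the last column is background."""
    all_embeddings = np.concatenate([cls_embeddings, bg_embedding], axis=0)
    return temp * cls_features @ all_embeddings.T + bias


rng = np.random.default_rng(0)
N, C, d = 5, 3, 16  # hypothetical sizes
feats = l2_normalize(rng.normal(size=(N, d)))
classes = l2_normalize(rng.normal(size=(C, d)))
bg_zero = np.zeros((1, d))  # the bg_embedding == 'zero' option

logits = cls_logits_sketch(feats, classes, bg_zero, temp=50.0, bias=-2.0)
print(logits.shape)  # (5, 4)
```

With the `'zero'` background embedding, the background logit is the same for every region (just the bias). So background is effectively predicted when all class logits are low.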